Table of contents

We were running one database on Azure Microsoft SQL Server and the size was around 600GB. There are less frequent read-write operations. That is costing us $700 each month.

Our services

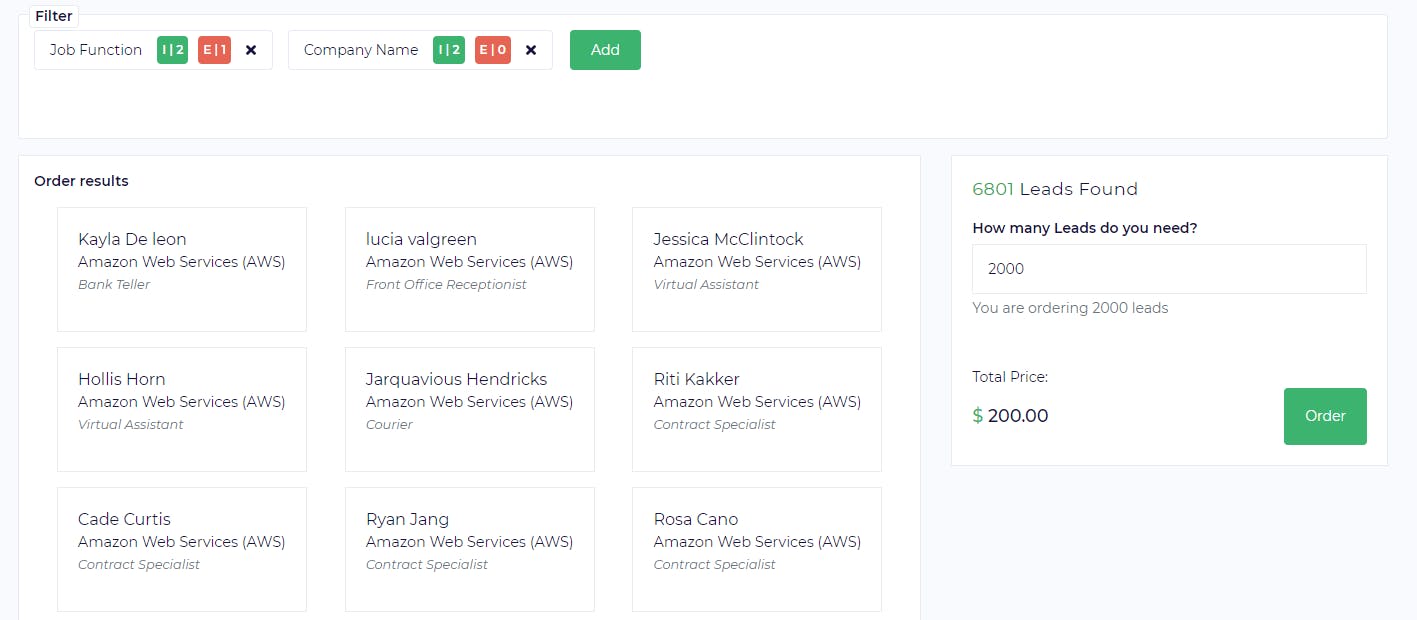

We are providing a service to our users where they can generate a business list from our database. We are providing a wide range of filters based on that users can search by Industry/Department/Company/Location/Job Role/Job Profile and purchase list from us. We provide verified(valid) emails to them.

Simple User Interface and simple solution as we are not solving a big problem here but when we talk about the solution there are lots of parts/flow of the system. We have to provide quick search functionality and ElasticSeach is our best friend here then the magic begins and we look for the people in our web universe and find the emails and validate those using some of our partners.

Once we have the emails we send those to users to download and update the latest email address in our DATABASE. We have one Logstash script that runs overnight and syncs the data to Elasticsearch.

So we are just using this database to update the emails only when some user ordered data and the overnight scheduler is doing incremental update/synch to Elasticsearch. Just for this work, we are paying $700.

We decided to move this database to AWS. AWS RDS was the best option but if we are using managed services we have to pay more (It is less than $700 but still that will cost us money just to store and manage).

After internal discussion, we have decided to use PostgreSQL on an EC2 instance. I think now we are talking about provisioning one 2 core 4 GB configuration machine with 1 TB of data and paying $50-70 each month. so we can reduce our cost by 90%.

Question: How much is the effort when we are talking about migrating the data between different database engines. Yes, there are some challenges but if you have AWS Database Migration Services you don't have to worry about anything. I thought the same but one caveat DMS will not create the Indexes for you, surely you can add indexes after migrating the data but due to the data, it was taking a long time to apply the Indexes after migration. So I created the table and then started the migration and all worked fine after.

Let's do the migration

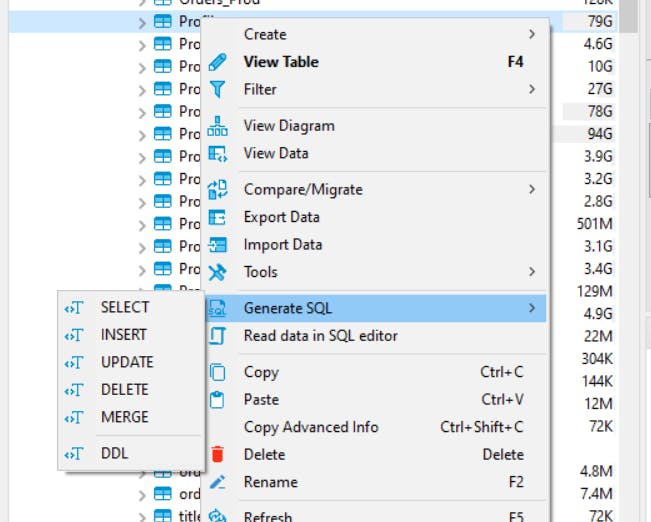

- DBeaver is my best friend as always to get the DDL for the tables or database. I did not have too many tables so I targeted each table at a time.

When you get a DDL from Microsoft SQL Server it will bring you the database.schema.table, just delete the database name from there.

For the Index convert the index to plain create index query (I like the simplicity of everything )

CREATE NONCLUSTERED INDEX Firstname ON DM.Profile ( first_name ASC )

WITH ( PAD_INDEX = OFF ,FILLFACTOR = 100 ,SORT_IN_TEMPDB = OFF , IGNORE_DUP_KEY = OFF , STATISTICS_NORECOMPUTE = OFF , ONLINE = OFF , ALLOW_ROW_LOCKS = ON , ALLOW_PAGE_LOCKS = ON )

ON [PRIMARY ]

CREATE INDEX idx_73838_firstname ON "DM"."Profile" USING btree (first_name ASC);

Login to AWS Console

Launch one EC2 instance and install PostgreSQL

Go to Database Migration Service

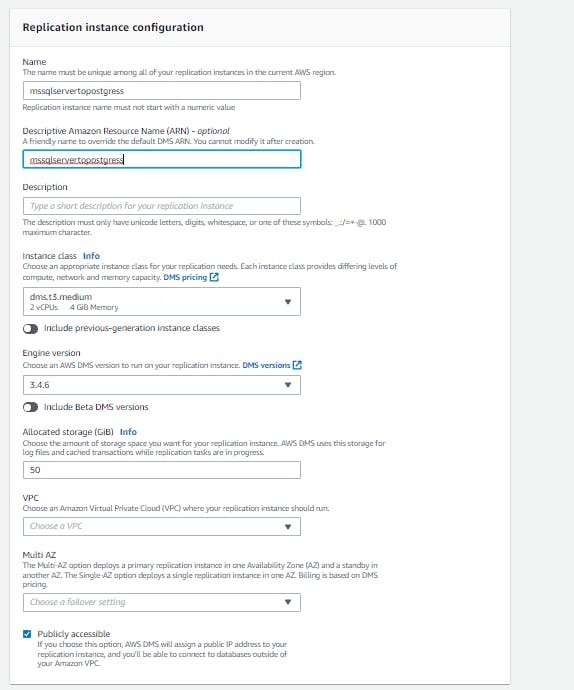

Create one Replication Instance, Everything there is obvious where you have to decide the Instance class and the storage. We have to check the publicly accessible as we are going to bring the data from Azure.

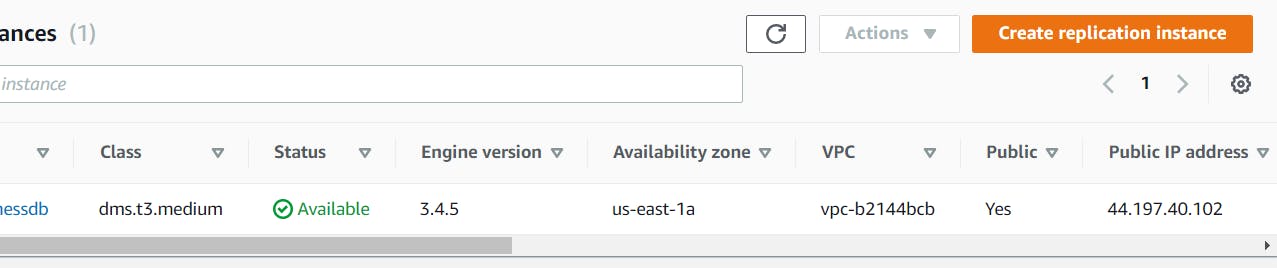

- It will take a few seconds and when ready, it will give us one public IP (this will be used in the next section to authorize access to this IP)

Next, we need to configure the endpoints but before that, we need to provide access to Replication Instance's IP from Azure, and from the EC2 Instance we have created our database.

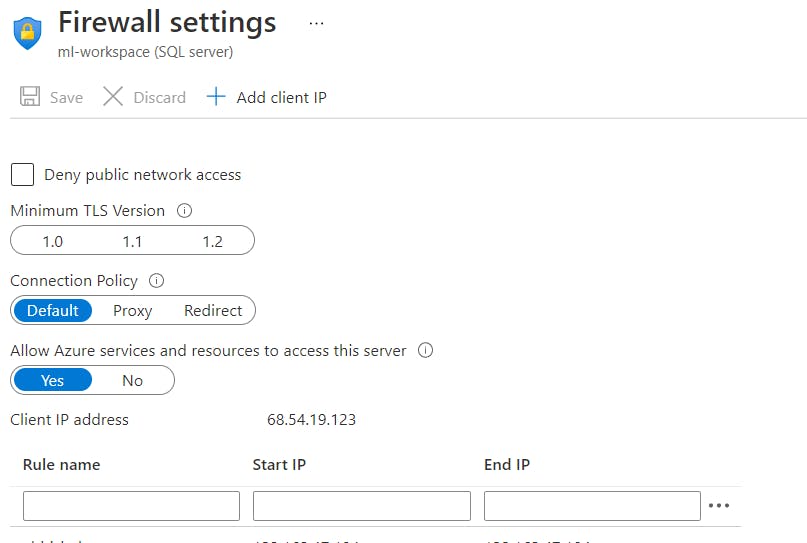

Log in to Azure and select the database and then set the server firewall and enter the Replication Instance's IP

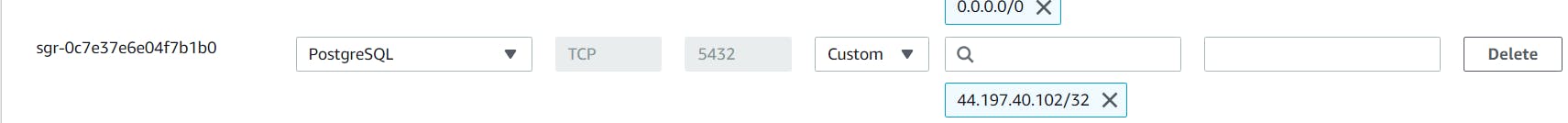

- Go to the security group associated with the EC2 Instance (where PostgreSQL was installed) and add an inbound rule with the Replication Instance's IP with port 5432

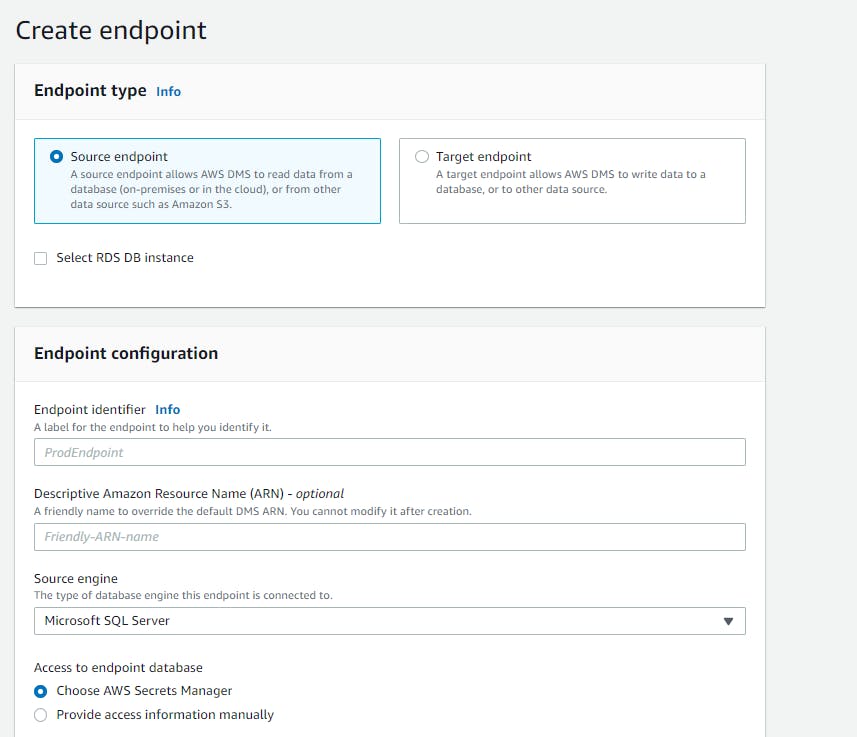

- Now we can create the Endpoints, We have to create two endpoints (source and target). To create a source endpoint select source engine as Microsoft SQL Server and either provide an AWS Secret Manager or enter the credential manually. When you are done with adding the endpoint do a test connection, irrespective of the connection is successful or not it will create the endpoint.

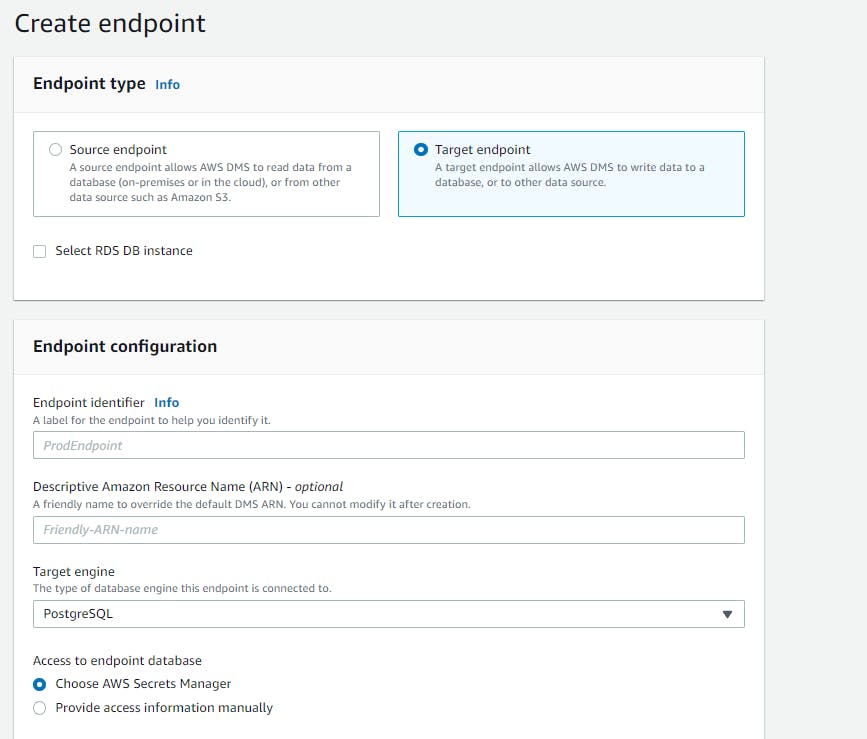

- Create a Target endpoint by selecting the Target Engine as PostgreSQL and provide the connection details. Test the connection.

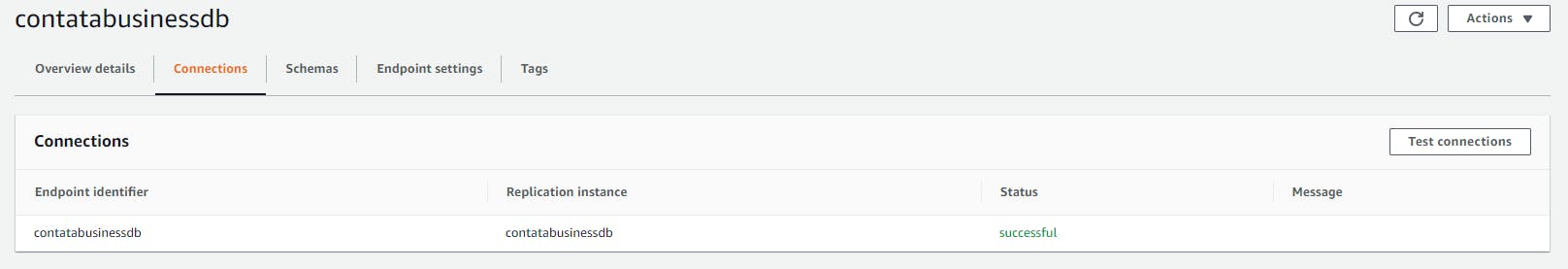

- Endpoints are ready. We can go into the details of the endpoint and under Connections, there is one status if it says failure there is some issue while creating the connection to the database, fix the issue, and then try the test connection, the status will change to testing and if all goes good it will change to successful

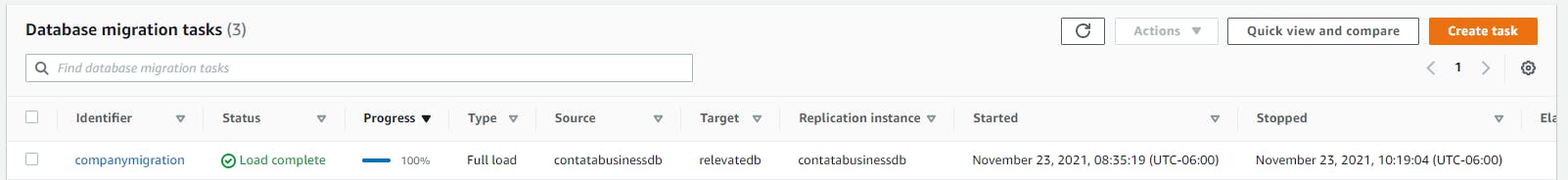

Time for seeing the magic, Click on Database Migration Tasks and create a new task select the source and target endpoints. In the migration type I have selected Migrate existing data because I need one-time migration only but there is a use case of incremental update can choose Migrate existing data and replicate ongoing changes.

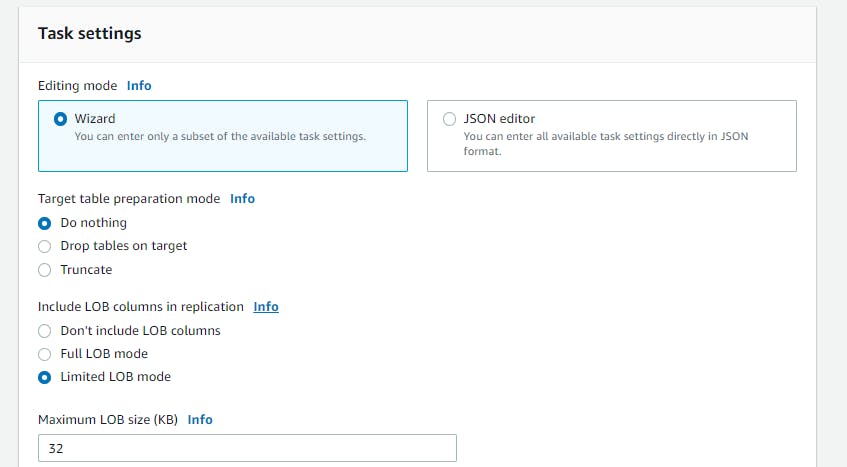

In the task settings go through the wizard and under Target table preparation mode select "do nothing" because we have already created the tables. Enable the cloudwatch logs this will help us to debug

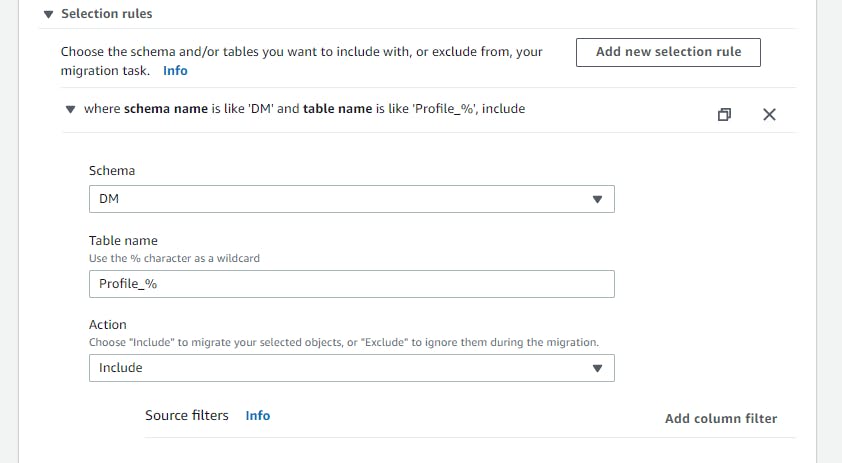

- Under table mapping we can include or exclude the tables based on our need if we want to include all can keep % as a wildcard, there is one cool feature to filter out the column level data If needed we can use it but I want a full migration I did not choose any column filter.

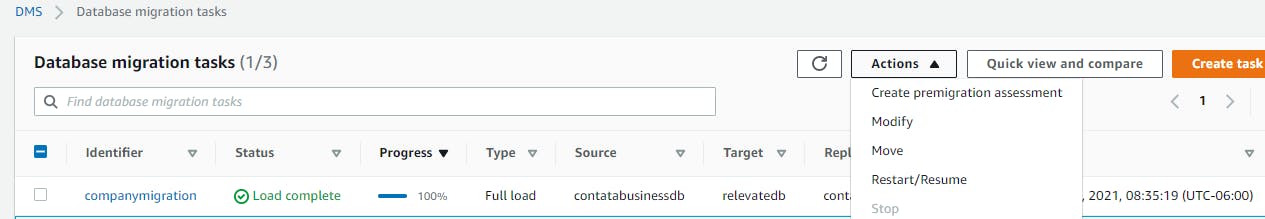

- Click on create, It will be in the ready state, and then click on restart from the tasks pages and the status will change into running state

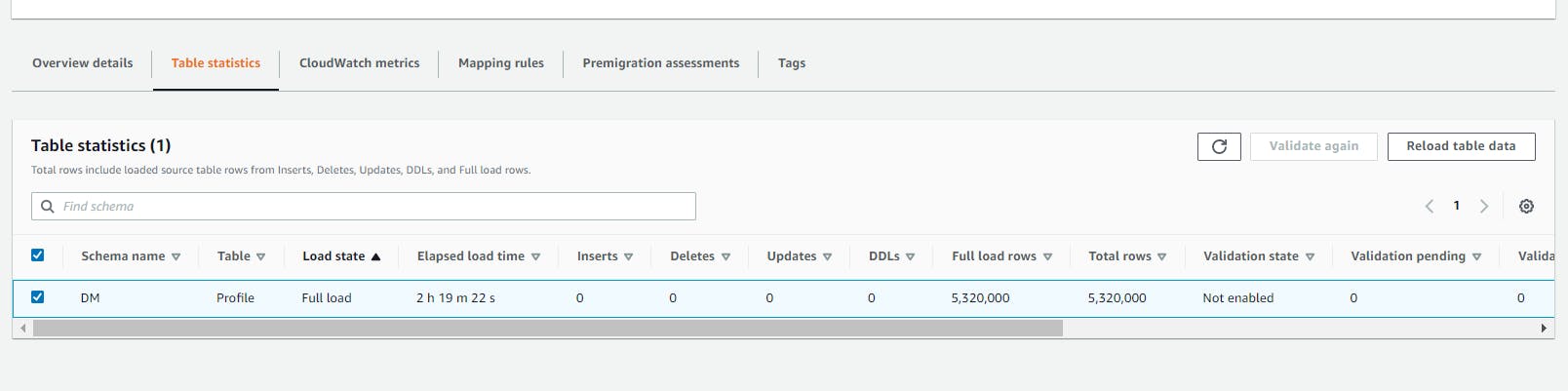

- Go to details of the task and under the table statistics, we can see all the progress by the table. One thing I noticed when I saw load state changed to the error just reload table data did the trick and completed the migration but we can check the logs in the cloudwatch.

- We can check the progress for each task and if there is a success it will show in the task list